Developer Quickstart

Asemic comes with a CLI app for setting up and maintaining your project. It is a Git-friendly, low-code solution.

Connecting to Your Data

This guide will walk you through the process of connecting your data sources to Asemic. We'll cover the steps for the main supported data warehouses.

Setting Up Workspace

Once you have been registered with Asemic, you will have a workspace and a starting project set up.

A workspace can contain multiple projects. Each project is an independent entity containing the datasets of a single product. (At the moment, the workspace and project are set up for you during onboarding.)

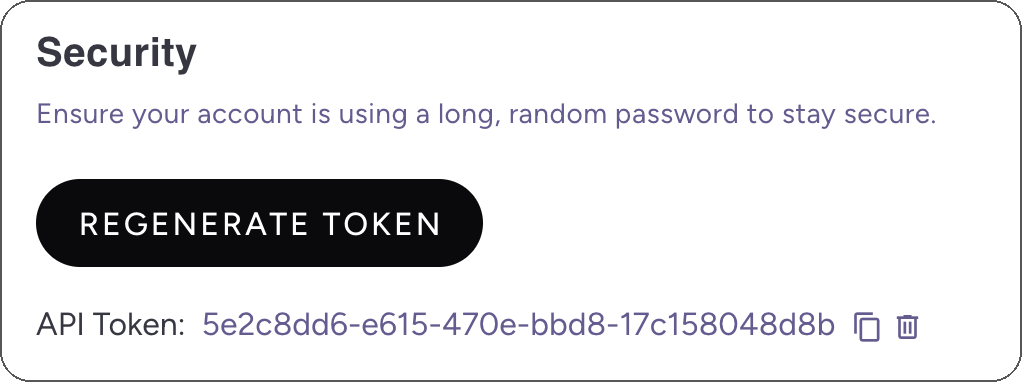

First, go to Settings -> Profile in the Asemic UI. You'll need the API token for the next step.

Asemic CLI Installation

You'll need to install the Asemic CLI tool, which is used for managing the semantic layer. Installation instructions:

macOS ARM

bash

curl -L -o asemic-cli https://github.com/Bedrock-Data-Project/asemic-cli/releases/latest/download/asemic-cli-macos-arm

chmod +x asemic-cli

sudo mv asemic-cli /usr/local/bin

macOS x64

bash

curl -L -o asemic-cli https://github.com/Bedrock-Data-Project/asemic-cli/releases/latest/download/asemic-cli-macos-x64

chmod +x asemic-cli

sudo mv asemic-cli /usr/local/bin

Ubuntu

bash

curl -L -o asemic-cli https://github.com/Bedrock-Data-Project/asemic-cli/releases/latest/download/asemic-cli-ubuntu

chmod +x asemic-cli

sudo mv asemic-cli /usr/local/bin

Windows

Windows is not directly supported, but the Ubuntu binary can be used with WSL (Windows Subsystem for Linux).

Post-installation

chmod +x asemic-cli

sudo mv asemic-cli /usr/local/bin
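The per-platform download commands above differ only in the release asset name, so the choice can be scripted. The following is a minimal sketch, not an official installer: the function name asemic_asset is hypothetical, and mapping Linux on x86_64 to the Ubuntu binary is an assumption (the architecture of that build is not stated here). It only prints the download URL for the current machine.

```shell
# Map `uname -s` / `uname -m` values to the release asset names listed above.
# Assumption: the Ubuntu binary targets x86_64 Linux (this also covers WSL).
asemic_asset() {
  os="$1"
  arch="$2"
  case "$os/$arch" in
    Darwin/arm64)  echo "asemic-cli-macos-arm" ;;
    Darwin/x86_64) echo "asemic-cli-macos-x64" ;;
    Linux/x86_64)  echo "asemic-cli-ubuntu" ;;
    *) echo "unsupported platform: $os/$arch" >&2; return 1 ;;
  esac
}

# Print the download URL for this machine, if it is one of the known platforms.
if asset="$(asemic_asset "$(uname -s)" "$(uname -m)")"; then
  echo "https://github.com/Bedrock-Data-Project/asemic-cli/releases/latest/download/$asset"
fi
```

The printed URL can be passed to curl -L -o asemic-cli, followed by the chmod and mv steps shown above.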

Setting Up the Asemic CLI

You'll need to set up the Asemic CLI:

- Generate an API token from the Asemic Settings page.

- Export the token to your environment:

bash

export ASEMIC_API_TOKEN=<your_token_here>

- Take note of your API ID, found in the projects list.

- Create a directory that will store your semantic layer config. Name the directory after your API ID.

bash

mkdir {API_ID} && cd {API_ID}

- Run auth to authorize Asemic to access your workspace, and follow the instructions for your specific database type.

bash

asemic-cli config auth
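Before running auth, it can help to confirm that the earlier steps took effect. This is a small sketch under stated assumptions: asemic_preflight is a hypothetical helper, not part of the CLI, and it checks only that the token variable is set and that the binary is on your PATH.

```shell
# Return 0 when the environment looks ready for `asemic-cli config auth`.
asemic_preflight() {
  if [ -z "${ASEMIC_API_TOKEN:-}" ]; then
    echo "ASEMIC_API_TOKEN is not set" >&2
    return 1
  fi
  if ! command -v asemic-cli >/dev/null 2>&1; then
    echo "asemic-cli not found on PATH" >&2
    return 1
  fi
}
```

A typical use would be to run asemic_preflight && asemic-cli config auth from inside the config directory.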

Setting Up Connection

BigQuery

To connect Asemic to your BigQuery database, you need to create a service account with the following roles:

- BigQuery Data Viewer

- BigQuery Job User

- BigQuery Data Editor (for the dedicated dataset where the data model will be created)

For detailed instructions on creating a service account, please refer to Google's support documentation.
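As a rough illustration of what that setup involves, the sketch below prints (without running them) the gcloud and bq commands that would create such a service account. The helper name print_sa_commands, the account name asemic-sa, and the dataset name asemic are hypothetical placeholders. Granting Data Editor through a GRANT statement on the dataset is one possible approach, so check it against Google's documentation before use.

```shell
# Print (do not run) commands for a service account with the roles listed above.
# All three arguments are placeholders to replace with your own values.
print_sa_commands() {
  project="$1"   # GCP project ID
  sa_name="$2"   # service account name (hypothetical)
  dataset="$3"   # dedicated dataset for the Asemic data model (hypothetical)
  member="serviceAccount:$sa_name@$project.iam.gserviceaccount.com"

  echo "gcloud iam service-accounts create $sa_name --project=$project"
  for role in roles/bigquery.dataViewer roles/bigquery.jobUser; do
    echo "gcloud projects add-iam-policy-binding $project --member=$member --role=$role"
  done
  # Data Editor is granted on the dedicated dataset only, not project-wide.
  echo "bq query --use_legacy_sql=false 'GRANT \`roles/bigquery.dataEditor\` ON SCHEMA \`$project\`.$dataset TO \"$member\"'"
  # The key file written here is the path that the auth prompt asks for.
  echo "gcloud iam service-accounts keys create sa.json --iam-account=${member#serviceAccount:}"
}

print_sa_commands gcp-warehouse-project asemic-sa asemic
```

Printing the commands first makes it easy to review them before running each one by hand.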

Example session:

bash

asemic-cli config auth

Enter your google billing project ID: gcp-warehouse-project

Enter path to your service account key (Should be generated on google cloud console from a service account): /Users/demo/Downloads/sa.json

Not Using BigQuery?

Don't worry, we support almost all data warehouses with a standard SQL interface. Check Connecting Data Sources for more examples.

Note: It is recommended to keep your semantic layer config in version control to facilitate collaboration. If you use GitHub, the Asemic demo repo (https://github.com/Bedrock-Data-Project/bedrock-demo) is a useful reference: it uses GitHub Actions for automatic validation of pull requests, automatic push on merge to the main branch, and a workflow for backfilling the entity model.

Setting up Semantic Layer

The Asemic semantic layer is primarily built to handle the User Entity data model, which consumes events generated by users interacting with your online app. A User Entity is defined by a set of properties (attributes describing the user), and KPIs are defined as aggregations of user properties.

Asemic assumes that data is tracked in one or more event tables, where each row represents an event performed by a user at a specific time. With event tables mapped in the semantic layer, Asemic can generate dozens of industry-standard KPIs as a starting point.
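To make the event-table assumption concrete, here is a toy sketch of how a KPI such as daily active users falls out of rows of the form "date user_id". It is only an illustration with made-up sample rows and a hypothetical dau helper. It is not how Asemic computes KPIs, and real event tables would also carry a timestamp and other attributes.

```shell
# Count distinct users per date from "date user_id" rows on stdin.
dau() {
  sort -u | awk '{ count[$1]++ } END { for (d in count) print d, count[d] }' | sort
}

# Made-up sample events: u1 logs in twice on the first day.
printf '%s\n' \
  '2024-08-23 u1' \
  '2024-08-23 u1' \
  '2024-08-23 u2' \
  '2024-08-24 u1' | dau
# prints:
# 2024-08-23 2
# 2024-08-24 1
```

The duplicate row for u1 is collapsed before counting, which is why the first day reports two active users rather than three events.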

Generating the User Entity Model

Run the Asemic CLI: Use the asemic-cli user-entity-model event command to start a wizard that helps you map a single event table. If your data is organized as one table per event type, the process will resemble the following example:

bash

asemic-cli user-entity-model event

Enter full table name: gendemo.ua_login

Enter event name [Leave empty for ua_login]: login

Enter date column name [Leave empty for date]:

Enter timestamp column name [Leave empty for time]:

Enter user id column name [Leave empty for user_id]:

Datasource saved to /demo/userentity/events/login.yml

Or without the wizard, suitable for automation:

bash

asemic-cli user-entity-model event \

--date-column=date \

--event-name=login \

--table=gendemo.ua_login \

--timestamp-column=time \

--user-id-column=user_id

If all your events are stored as subschemas in a single table, you can use the --subschema flag in the wizard. Here is an example:

bash

asemic-cli user-entity-model event --subschema

Enter full table name: gendemo.event_table

Enter the name of the column that indicates which subschema column is used. [Leave empty for event_name]:

Enter the value of event_name column for this event: level_started

Enter column name that contains parameters for this subschema: level_started

Enter event name [Leave empty for level_started]:

...

Datasource saved to /demo/userentity/events/level_started.yml

Generate the Entity Model: Run asemic-cli user-entity-model entity to generate an initial set of properties and KPIs. This command asks for the name of the schema in your warehouse that Asemic will use to materialize the tables needed for the user entity model. For example, Asemic will generate asemic.v1daily, asemic.v1active and several other tables. The schema can be changed later in the config.yml file in your semantic layer directory.
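When several event tables need to be mapped before generating the entity model, the flag-based form of user-entity-model event can be scripted. The sketch below only prints one command per table so the result can be reviewed first. The event_cmd helper and the second table (gendemo.ua_purchase) are hypothetical, and the column names assume the wizard defaults (date, time, user_id).

```shell
# Build the non-interactive mapping command for one event table.
# Column names are assumed to match the wizard defaults: date, time, user_id.
event_cmd() {
  table="$1"
  event="$2"
  printf 'asemic-cli user-entity-model event --date-column=date --event-name=%s --table=%s --timestamp-column=time --user-id-column=user_id\n' "$event" "$table"
}

# Hypothetical table list, one "<table> <event name>" pair per line.
while read -r tbl evt; do
  event_cmd "$tbl" "$evt"
done <<'EOF'
gendemo.ua_login login
gendemo.ua_purchase purchase
EOF
```

Once the printed commands look right, the output can be piped to sh to run them.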

Directory Structure

Once generated, the structure will contain three subfolders:

bash

Project name (your {API_ID})

├── events # Descriptions of user events.

| # It can be any table with rows identified by user ID, timestamp, date partition

| # and optional attributes.

├── kpis # KPIs are metrics that can be plotted on charts.

└── properties # Think of properties as columns that you can filter or group by.

Properties

Properties are a powerful mechanism to define complex columns easily. There are several types of properties, each serving a specific purpose.

Here are a few examples. Check Setting Up Semantic Layer for more detailed setup instructions.

yaml

# sum of revenue field in payment_transaction_action
revenue_on_day:
  data_type: NUMBER
  can_filter: true
  can_group_by: false
  event_property:
    source_event: payment_transaction
    select: "{revenue}"
    aggregate_function: sum
    default_value: 0

# max level played on a given day
max_level_played:
  data_type: INTEGER
  can_filter: true
  can_group_by: true
  event_property:
    source_event: level_played
    select: "{level_played.level}"
    aggregate_function: max
    default_value: ~

# Users will have value of 1 if active on a date, otherwise 0
active_on_day:
  data_type: INTEGER
  can_filter: false
  can_group_by: false
  event_property:
    source_event: activity
    select: 1
    aggregate_function: none
    default_value: 0

payment_segment:
  data_type: STRING
  can_filter: true
  can_group_by: true
  computed_property:
    select: '{revenue_lifetime}'
    value_mappings:
      - range: {to: 0}
        new_value: Non Payer
      - range: {from: 0, to: 20}
        new_value: Minnow
      - range: {from: 20, to: 100}
        new_value: Dolphin
      - range: {from: 100}
        new_value: Whale

KPIs

KPIs are aggregations of properties that can be plotted over time. Examples:

yaml

# number of daily active users
dau:
  label: DAU
  select: SUM({property.active_on_day})
  x_axis:
    date: {total_function: avg}
    cohort_day: {}

retention:
  select: SAFE_DIVIDE({kpi.dau} * 100, SUM({property.cohort_size}))
  unit: {symbol: '%', is_prefix: false}
  x_axis:
    # date is not available as an axis for cohort metric
    cohort_day: {}

# generated retention_d1, retention_d2, etc
retention_d{}:
  select: SAFE_DIVIDE({kpi.dau} * 100, SUM({property.cohort_size}))
  where: '{property.cohort_day} = {}'
  unit: {symbol: '%', is_prefix: false}
  x_axis:
    date: {}
  template: cohort_day

# reference other kpis
arpdau:
  label: ARPDAU
  select: SAFE_DIVIDE({kpi.revenue}, {kpi.dau})
  unit: {symbol: $, is_prefix: true}
  x_axis:
    date: {}
    cohort_day: {}

Submitting semantic layer

After generating the semantic layer, submit it to Asemic. Before submitting, run asemic-cli config validate to validate the configuration; asemic-cli will dry-run several queries to test your properties and KPIs. Once validation succeeds, submit the config with asemic-cli config push.
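The validate-then-push order can be enforced in a small wrapper, for example in a CI job. This is a sketch, assuming that asemic-cli config validate exits with a non-zero status when validation fails (that behavior is not confirmed here). The function name validate_and_push is hypothetical, and the CLI name is a parameter so the flow can be dry-run with echo.

```shell
# Push the config only if validation succeeds.
# Assumption: `config validate` returns a non-zero exit status on failure.
validate_and_push() {
  cli="${1:-asemic-cli}"
  "$cli" config validate || {
    echo "validation failed; not pushing" >&2
    return 1
  }
  "$cli" config push
}

# Dry run: substitute echo for the CLI to see the commands that would run.
validate_and_push echo
# prints:
# config validate
# config push
```

Calling validate_and_push with no argument runs the real asemic-cli from the semantic layer directory.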

Backfilling the semantic layer data model

As a final step, the semantic layer needs to be backfilled. This can be done either with asemic-cli (asemic-cli user-entity-model backfill --date-from='2024-08-23' --date-to='2024-08-25') or, if you use GitHub, by running a backfill workflow (see Demo Project for an example).

Asemic materializes your physical data model for performance reasons. Integrate the backfill into your ETL process so that the model is updated as new data becomes available.
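As one way to wire this into a daily ETL job, the sketch below builds the backfill command for the previous day. The helpers yesterday and backfill_cmd are hypothetical, and it assumes that --date-to is inclusive, so that passing the same date twice covers exactly one day. The command is printed rather than run so it can be checked first.

```shell
# Yesterday's date as YYYY-MM-DD; tries GNU date first, then BSD/macOS date.
yesterday() {
  date -d yesterday +%F 2>/dev/null || date -v-1d +%F
}

# Build the backfill command for a single day.
# Assumption: --date-to is inclusive, so from == to covers one day.
backfill_cmd() {
  day="$1"
  echo "asemic-cli user-entity-model backfill --date-from='$day' --date-to='$day'"
}

backfill_cmd "$(yesterday)"
```

A scheduler step could run the printed command after the day's event tables have finished loading.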

This will generate the basic set of properties and metrics from your events. To get the most out of Asemic, check Advanced Topics in the documentation.